ChatGPT Plugins Are Open for Business

OpenAI deepens its moat despite skepticism

ChatGPT Plugins and Browser are now in beta. They will be rolled out to all premium users over the next week. I personally just got access to Plugins.

In this article:

What plugins are, how they work, and how you can use them for real-world tasks.

How the Plugins release fits with recent AI developments and OpenAI’s strategy.

The next revolution in software usage and distribution.

Does OpenAI still have a moat?

Speculations on OpenAI’s next moves.

Contemporary History

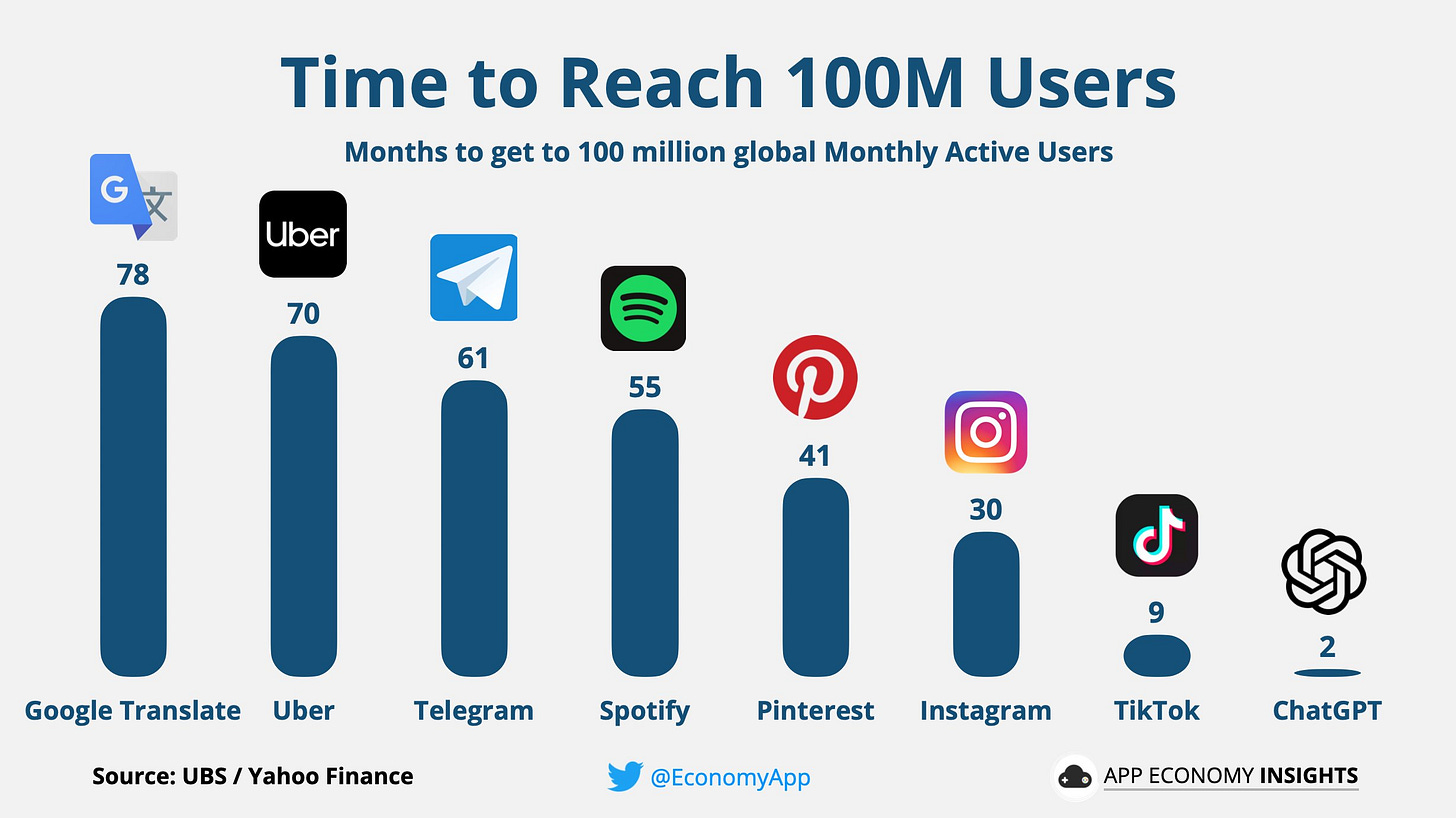

A million years ago (actually 6 months ago) OpenAI was shocked to see ChatGPT, an unassuming “research preview”, turn into the fastest-growing consumer application in history: 100 million monthly active users after 2 months. They weren’t even trying.

Since then, OpenAI has:

Struck a $10 billion partnership with Microsoft

Released GPT-4, likely the largest AI model ever trained, with “sparks of AGI” capabilities

Declared the end of the age of large models (‘ages’ last weeks in the AI world)

The success of ChatGPT triggered an astonishing (and frankly worrying) arms race among Big Tech players to train and deploy Large Language Models. The open-source world has been no less enterprising, releasing a staggering number of custom models and applications, mostly built on top of Meta’s leaked LLaMA model.

Now OpenAI is making the next move by opening up Plugins, a web browser, and a code interpreter.

If, like me, you just got access to plugins (or hopefully will soon), you might wonder how they work and what you can do with them. And if you still haven’t subscribed to ChatGPT’s premium plan, now may be a good time to reconsider.

How Do Plugins Work?

With Plugins, OpenAI is reinventing the App Store for the LLM age. To understand how a plugin works, let’s look at it from three perspectives: the user, the developer, and ChatGPT.

As the user:

I can browse through a library of plugins that perform a variety of tasks.

I can activate up to 3 plugins at the same time for a given chat.

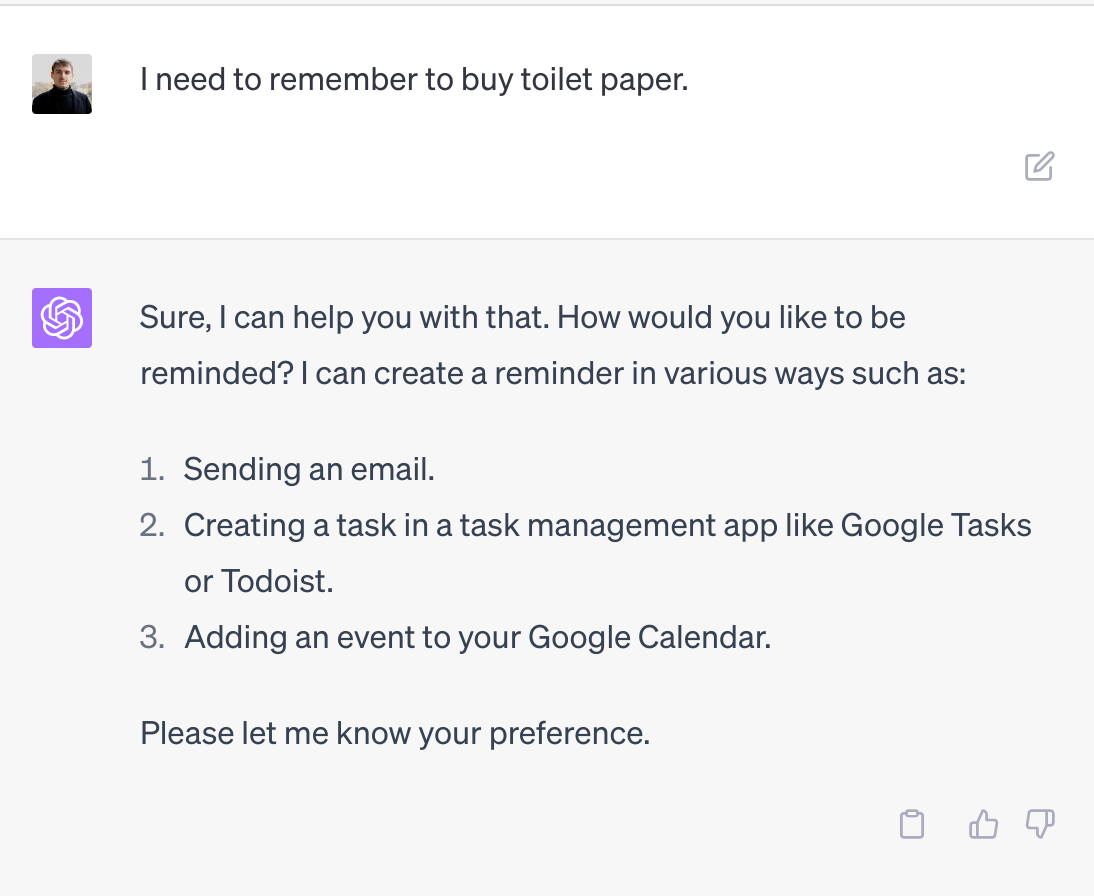

Let’s say I activated a plugin that connects to my calendar. I tell ChatGPT what I need: “Help me plan a birthday party.” If ChatGPT decides that my query requires a plugin, it will call it and then tell me what it has done: “I have added an event for the birthday party on your calendar.”

If I want, I can inspect the request that ChatGPT sent through the plugin and the response it got. This is a good implementation of the “show your work” principle.

As the developer:

I build or expose an API that I want ChatGPT to use.

I write a manifest file that tells ChatGPT (in plain English) how it can use my API endpoints to get stuff done.

I submit my plugin to OpenAI. Once they authorize it, it will appear in the plugin store. Users will be able to activate and use it.

Interestingly, I can deploy and test plugins locally without limits. And I can share my unauthorized plugins with up to 15 plugin developers. So even if OpenAI doesn’t authorize my plugin, I can still get utility out of it. This seems like a big deal to me.

Note: Not everyone can develop plugins at the time of writing. To become a “plugin developer” you have to join the waitlist and select the option: “I am a developer and want to build a plugin”.
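To make the developer side concrete, here is a minimal sketch of the two documents a plugin serves: the manifest and the API description it points to. The field names follow OpenAI’s plugin documentation as I understood it at the time of writing (a manifest at `/.well-known/ai-plugin.json` that references an OpenAPI spec), so treat them as an assumption and check the current docs. The to-do API, the `example.com` URLs, and the `/todos` endpoint are hypothetical.

```python
import json

# Hypothetical base URL; a real plugin is served from the developer's own domain.
BASE_URL = "https://example.com"

# Plugin manifest, normally served at /.well-known/ai-plugin.json.
# Field names reflect OpenAI's plugin docs at the time of writing (an assumption).
manifest = {
    "schema_version": "v1",
    "name_for_human": "Todo List",
    "name_for_model": "todo",
    "description_for_human": "Manage your to-do list.",
    # The plain-English instructions the model reads to decide when to call the API.
    "description_for_model": (
        "Plugin for managing a to-do list. Use it when the user asks "
        "to add, list, or remove tasks."
    ),
    # No login flow, like a public weather API; a personal plugin would declare one here.
    "auth": {"type": "none"},
    "api": {
        "type": "openapi",
        "url": f"{BASE_URL}/openapi.json",
        "is_user_authenticated": False,
    },
    "logo_url": f"{BASE_URL}/logo.png",
    "contact_email": "dev@example.com",
    "legal_info_url": f"{BASE_URL}/legal",
}

# Minimal OpenAPI spec describing one hypothetical endpoint.
openapi_spec = {
    "openapi": "3.0.1",
    "info": {"title": "Todo Plugin", "version": "v1"},
    "servers": [{"url": BASE_URL}],
    "paths": {
        "/todos": {
            "get": {
                "operationId": "listTodos",
                "summary": "List the user's current tasks",
                "responses": {"200": {"description": "A list of tasks"}},
            }
        }
    },
}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
    print(json.dumps(openapi_spec, indent=2))
```

The `description_for_model` string and the endpoint summaries are the “plain English” part: they are what ChatGPT reads when deciding whether and how to call the API, so they deserve as much care as the API itself.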

As ChatGPT:

When the conversation starts, I am made aware of up to 3 plugins that I can use to fulfill the user’s query.

If the user’s query requires it, I will make a request to a plugin’s API endpoints. Once I get a response, I will parse it and write a relevant message to the user.

Plugins can be as simple as getting a weather report from the web, which requires no authorization flow. They can also be personal to the user. For example, the Zapier plugin lets me log in with my account, and then I can use it to send emails or add events to my calendar.
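The ChatGPT side of the loop can be sketched as a toy program. This is not how ChatGPT works internally: the keyword matching below is a crude, hypothetical stand-in for the model reading plugin descriptions, and `call` stands in for the HTTP request to the plugin’s API. It shows the three behaviors described above: a cap of 3 active plugins, deciding whether a plugin is needed, and keeping a request/response trace the user can inspect.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

MAX_ACTIVE_PLUGINS = 3  # ChatGPT allowed up to 3 enabled plugins per chat at the time of writing


@dataclass
class Plugin:
    name: str
    keywords: set[str]            # toy stand-in for the manifest's description_for_model
    call: Callable[[str], dict]   # stand-in for an HTTP request to the plugin's API


@dataclass
class Chat:
    plugins: list[Plugin]
    trace: list[dict] = field(default_factory=list)  # requests/responses the user can inspect

    def __post_init__(self) -> None:
        if len(self.plugins) > MAX_ACTIVE_PLUGINS:
            raise ValueError(f"at most {MAX_ACTIVE_PLUGINS} plugins per chat")

    def pick_plugin(self, query: str) -> Plugin | None:
        # The real model decides from the plugin descriptions; keyword overlap is a crude proxy.
        words = {w.strip(".,!?") for w in query.lower().split()}
        return next((p for p in self.plugins if p.keywords & words), None)

    def answer(self, query: str) -> str:
        plugin = self.pick_plugin(query)
        if plugin is None:
            return "No plugin needed; answering directly."
        response = plugin.call(query)
        # Record what was sent and received ("show your work").
        self.trace.append({"plugin": plugin.name, "request": query, "response": response})
        return f"Done via {plugin.name}: {response['result']}"


if __name__ == "__main__":
    calendar = Plugin("calendar", {"calendar", "event"}, lambda q: {"result": "event added"})
    chat = Chat([calendar])
    print(chat.answer("Put the birthday party on my calendar."))
    print(chat.trace)
```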

What Can I Do With Plugins?

I only received plugin access a few hours ago, so I will write my first impressions.

The number of plugins in the Plugin Store is relatively small: I counted 70. Clearly, OpenAI is vetting every single plugin and giving priority to its partners and big companies, although the plan is to scale it to thousands or millions of plugins.

Here are the ones I tried first:

WolframAlpha. This seemed like a big deal when it was released. WolframAlpha is a “computational knowledge engine” that knows a lot of facts and is very good at algorithms and computation. Stephen Wolfram promises this will give GPT the computational abilities it lacks. In my brief trial, it was a bit underwhelming. I had to explicitly encourage ChatGPT to use it rather than run its own calculations. GPT and the Wolfram interpreter don’t understand each other very well, and Wolfram often fails to return a result. Maybe it will prove itself in time[1].

AskYourPDF and ChatWithPDF. I tried to use these to explore some arxiv.org papers on AI. With ChatWithPDF, GPT completely hallucinated the papers; I’m not sure what went wrong there. AskYourPDF seems to work pretty well: ChatGPT sends a query to a backend engine that returns a list of text snippets, and at the end of the summary it references the pages it checked, which is nice. I look forward to using this for skimming papers.

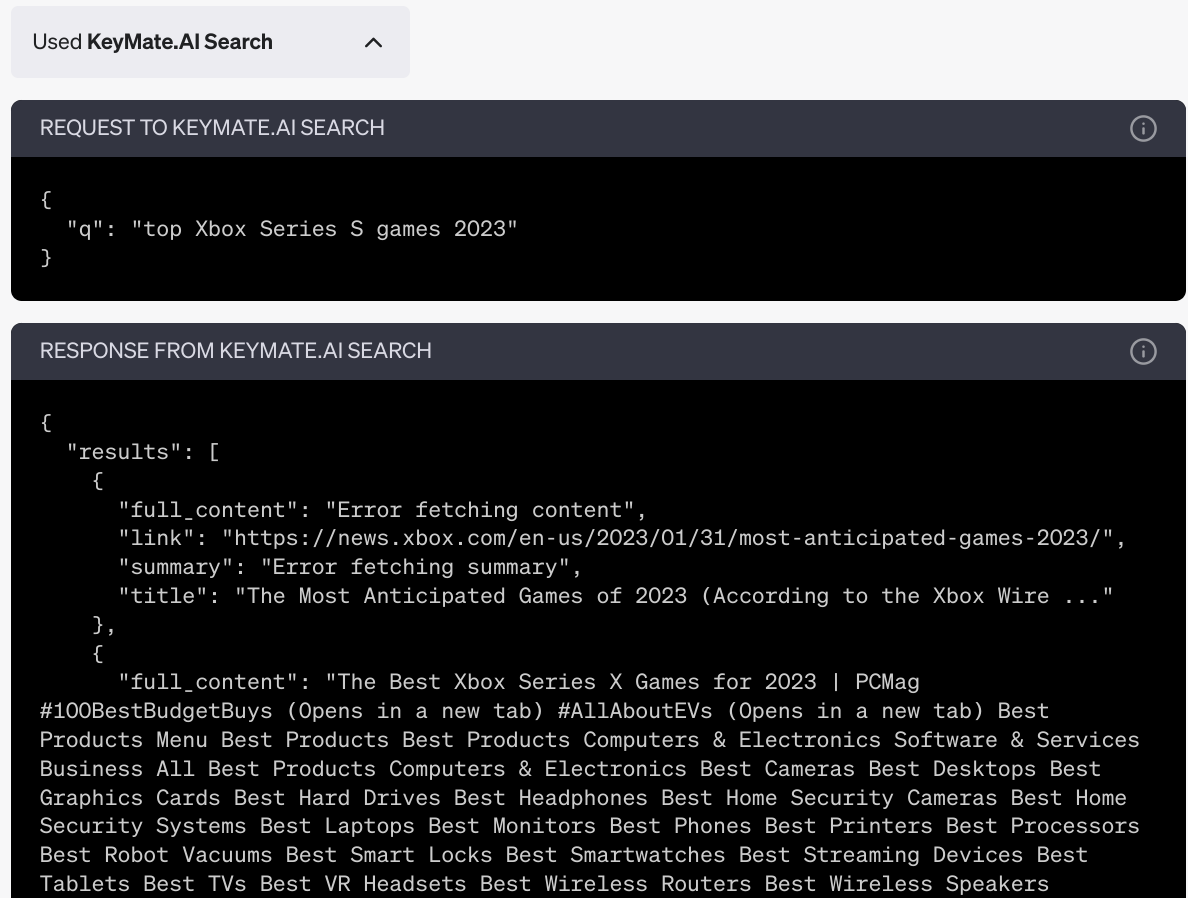

KeyMate Search. Since I don’t have the official browser yet, this plugin allows ChatGPT to browse the web for me. Very nice.

Diagram It. They stole my idea! I also wanted to build a plugin that would use Mermaid to display flowcharts and other graphs. This is clearly low-hanging fruit, and I’m glad it’s out there. Unfortunately, GPT often messes up the Mermaid syntax and the chart fails to render.
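Back to AskYourPDF for a moment. Its backend is not public, so the following is only a toy sketch of the pattern I observed: score each page of a document against the query, return the best snippets, and keep their page numbers so the answer can cite them. Production tools more likely use embedding search; the keyword counting and the function names here are simplifying assumptions.

```python
from __future__ import annotations

import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def top_snippets(pages: dict[int, str], query: str, k: int = 2, width: int = 200) -> list[tuple[int, str]]:
    """Return up to k (page_number, snippet) pairs, ranked by how often query terms appear."""
    query_terms = set(tokenize(query))
    scored = []
    for page_no, text in pages.items():
        counts = Counter(tokenize(text))
        score = sum(counts[term] for term in query_terms)
        if score > 0:
            scored.append((score, page_no, text[:width]))
    # Highest score first; ties go to the earlier page.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(page_no, snippet) for _, page_no, snippet in scored[:k]]


def page_references(snippets: list[tuple[int, str]]) -> str:
    """Format the citation line appended after the summary."""
    return "Pages checked: " + ", ".join(str(page_no) for page_no, _ in snippets)


if __name__ == "__main__":
    paper = {
        1: "We introduce a new attention mechanism.",
        2: "Attention weights are computed per head. Attention scales quadratically.",
        3: "Acknowledgements and funding.",
    }
    hits = top_snippets(paper, "How does attention scale?")
    for page_no, snippet in hits:
        print(page_no, snippet)
    print(page_references(hits))
```

Returning page numbers alongside the text is what makes the “pages checked” line possible: the model summarizes the snippets but can only cite what the backend handed back.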
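As for Diagram It, its implementation isn’t public either. One plausible way to reduce broken charts, sketched here purely as an assumption, is to have the model output structured edges and let deterministic code write the Mermaid text, rejecting bad node IDs before anything reaches the renderer. The function name and the node-ID rule are my own illustrative choices.

```python
from __future__ import annotations

import re

# Conservative node-ID rule (an assumption): a letter followed by letters, digits, or underscores.
NODE_ID = re.compile(r"^[A-Za-z][A-Za-z0-9_]*$")
DIRECTIONS = {"TB", "TD", "BT", "RL", "LR"}


def mermaid_flowchart(edges: list[tuple[str, str]], labels: dict[str, str], direction: str = "TD") -> str:
    """Render (source, target) edges as Mermaid flowchart text.

    The model only supplies structured data; this function owns the syntax.
    """
    if direction not in DIRECTIONS:
        raise ValueError(f"unknown direction: {direction}")
    lines = [f"flowchart {direction}"]
    for src, dst in edges:
        for node in (src, dst):
            # Mermaid's docs warn that a lowercase "end" breaks flowcharts.
            if not NODE_ID.match(node) or node == "end":
                raise ValueError(f"invalid node id: {node!r}")
        # Quoted labels tolerate spaces and punctuation; swap inner double quotes for single.
        src_label = labels.get(src, src).replace('"', "'")
        dst_label = labels.get(dst, dst).replace('"', "'")
        lines.append(f'    {src}["{src_label}"] --> {dst}["{dst_label}"]')
    return "\n".join(lines)


if __name__ == "__main__":
    print(mermaid_flowchart(
        [("idea", "plugin"), ("plugin", "store")],
        {"idea": "Have an idea", "plugin": "Build plugin", "store": "Plugin Store"},
    ))
```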

Zapier: One Plugin to Rule Them All?

There is one plugin that towers above all others, and that is Zapier.

Zapier is an “API glue” service that allows you to connect APIs and automate workflows. It claims to connect over 5,000 apps. In theory, through Zapier you could get GPT to interact with all of these apps. If this works well, you could use a single plugin for most of your needs instead of picking 3 individual plugins every time.

How well does it work in practice?

I installed the Zapier plugin and got access to a page where I can review my OpenAI <> Zapier integrations. I added the following ones:

Create a task in my Google Tasks

Add or update an event on my Google Calendar

Create a draft in my Gmail

GPT became aware of these integrations.

I then asked it to add a task, put a reminder on my calendar for tomorrow, and draft an email letting my girlfriend know that I’d handle it. Everything worked as expected!

Each time, I had to open the Zapier website to review and confirm the task. This is a smart safety feature. Unfortunately, it is also very annoying, and I wish I could disable it for some tasks.
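The “one plugin, many actions” design, plus the per-action confirmation I was wishing for, can be sketched as a small dispatcher. This is a hypothetical illustration, not Zapier’s API: `ActionGateway`, the action names, and the `confirm` callback are all invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    handler: Callable[[dict], str]
    needs_confirmation: bool = True  # safe default, like Zapier's review step


class ActionGateway:
    """One plugin exposing many user-configured actions (hypothetical, not Zapier's API)."""

    def __init__(self, confirm: Callable[[str, dict], bool]):
        self.actions: dict[str, Action] = {}
        self.confirm = confirm  # asks the user to approve a pending action

    def register(self, name: str, handler: Callable[[dict], str], needs_confirmation: bool = True) -> None:
        self.actions[name] = Action(handler, needs_confirmation)

    def run(self, name: str, params: dict) -> str:
        if name not in self.actions:
            return f"unknown action: {name}"
        action = self.actions[name]
        # Only actions flagged as sensitive go through the review step.
        if action.needs_confirmation and not self.confirm(name, params):
            return f"{name}: cancelled by user"
        return action.handler(params)


if __name__ == "__main__":
    # Auto-approve here; a real UI would show the user what is about to happen.
    gateway = ActionGateway(confirm=lambda name, params: True)
    gateway.register("create_task", lambda p: f"Task created: {p['title']}")
    gateway.register("draft_email", lambda p: f"Draft for {p['to']} saved", needs_confirmation=False)
    print(gateway.run("create_task", {"title": "Buy cake"}))
    print(gateway.run("draft_email", {"to": "my girlfriend"}))
```

Making confirmation a per-action flag keeps the safe default while letting low-risk actions, like saving a draft that is never sent, skip the extra click.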

Here are a few more ideas for what GPT could do via Zapier:

post on social media

send a Slack message

open a pull request on GitHub

create tasks on Jira or Trello

create a Stripe payment link

create a Spotify playlist

write a presentation on Google Slides

upload a file to AWS S3

These features seem potentially very useful, and I'm surprised more people haven't been talking about this.

The Promise of Plugins: Interface and Integration

At the time of writing, plugins are still raw. ChatGPT doesn’t always understand when it should use them. Errors and empty responses are fairly common. There are fewer than 100 official plugins.

Still, the promise is huge. The best thing the iPhone ever did was to enable the App Store, a platform for millions of apps.

The App Store put useful software in the hands of billions. Now, OpenAI aims to take software distribution to the next era. This coming revolution will be driven by two features:

Natural language interface. Soon, everyone will be able to use software by simply talking to it. The barriers to usage are approaching zero.

Integrating and orchestrating applications. This is, in my opinion, Generative AI’s strongest value proposition on the user side. On your smartphone, you are always using one app at a time. If two apps want to connect, their developers must build an integration[2]. In contrast, GPT can seamlessly and intelligently orchestrate plugins according to the needs of the moment.

Imagine you haven’t been feeling well lately, and you want to improve your diet. GPT can analyze your situation, make some recommendations based on a web search, create a spreadsheet in your Google Drive with a diet plan, make a list of groceries to order on Instacart, and write a message to your family with the dishes that you plan to cook. All this without leaving the chat interface. In some ways, this is much better than having a dedicated app. There is simply no way to orchestrate and integrate all these functions on-demand within the current paradigm.
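Stripped down, this kind of orchestration is a chain of calls where each step consumes what earlier steps produced. Here is a toy sketch under obvious assumptions: the three step functions are hypothetical stubs standing in for a web search, a grocery plugin, and a messaging plugin (the spreadsheet step is left out for brevity), and the plan is hard-coded, whereas GPT would choose and order the steps itself.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical lookup table; a real plugin would call a recipe or grocery service.
INGREDIENTS = {
    "lentil soup": ["lentils", "carrots", "onions"],
    "fish with greens": ["fish", "spinach", "lemons"],
}


def suggest_dishes(ctx: dict) -> dict:
    # Stub for "web search + model reasoning" about the user's situation.
    return {"dishes": ["lentil soup", "fish with greens"]}


def build_grocery_list(ctx: dict) -> dict:
    # Stub for a grocery plugin: depends on the dishes chosen by the previous step.
    items = {item for dish in ctx["dishes"] for item in INGREDIENTS[dish]}
    return {"groceries": sorted(items)}


def write_family_message(ctx: dict) -> dict:
    # Stub for a messaging plugin.
    return {"message": "This week I'm cooking: " + ", ".join(ctx["dishes"]) + "."}


def run_plan(
    steps: list[tuple[str, Callable[[dict], dict]]], context: dict | None = None
) -> tuple[dict, list[str]]:
    """Run steps in order; each step can read everything earlier steps produced."""
    context = dict(context or {})
    completed = []
    for name, step in steps:
        context.update(step(context))
        completed.append(name)
    return context, completed


PLAN = [
    ("suggest dishes", suggest_dishes),
    ("grocery list", build_grocery_list),
    ("message family", write_family_message),
]

if __name__ == "__main__":
    result, done = run_plan(PLAN)
    print(done)
    print(result["groceries"])
    print(result["message"])
```

The shared context is the “integration” no developer had to build: the grocery and messaging stubs never know about each other, yet both work off the dishes chosen in the first step.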

Orchestration and integration are clearly top of mind for OpenAI, as evidenced by their description of which plugins qualify as “magical”:

Retrieval over user-specific or otherwise hard-to-search knowledge sources (searching over Slack, searching a user’s docs or another proprietary database).

Plugins that synergize well with other plugins (asking the model to plan a weekend for you, and having the model blend usage of flight/hotel search with dinner reservation search).

Plugins that give the model computational abilities (Wolfram, OpenAI Code Interpreter, etc).

Plugins that introduce new ways of using ChatGPT, like games.

The magic of GPT is that it can enable countless applications to interact dynamically without needing developers and product managers to engineer the usage patterns and necessary integrations.

Risks and Dangers

While this article focuses on real-world utility, it is worth saying that plugins also bring new risks. We are enthusiastically giving unrestrained API access to AI models that are fundamentally misaligned and might one day cause human extinction. GPT-4 is probably not a direct existential threat. If the race to improve AI capabilities were to stop here, we could reap the benefits of these innovations and enjoy a sweet and fruitful AI summer.

I have little hope of this happening, so the trend of “let’s uncritically connect our largest AI models to every API out there” is a worrisome one.

There are also more mundane risks related to security, privacy, and malicious actors exploiting plugins with jailbreaks and prompt injections. I am moderately confident that we can adapt and learn to manage these risks over time, as we did with the cybersecurity risks that emerged with the invention of the web.

Does OpenAI Still Have A Moat?

Last week the web was abuzz with a purportedly leaked memo from a Google employee: “We Have No Moat, And Neither Does OpenAI.”

I recommend reading it as an overview of the incredible achievements of the open-source community over the past few months.

I disagree with the memo’s fundamental thesis, despite the endless legions of web influencers pushing it as gospel. OpenAI keeps its moat, at least for now. The moat consists of a few elements:

GPT-4 is still the smartest and most capable model, with Google’s PaLM 2 and Anthropic’s Claude in hot pursuit.

ChatGPT is still the best interface for leveraging LLM technology. It’s not perfect, but it’s generally helpful and reliable. It does what I need it to do[3].

OpenAI is introducing novel applications on top of ChatGPT that many people really want: the browser, the code interpreter, and plugins[4].

If they keep the momentum going, I predict that 6 months from now we will agree that OpenAI still has a moat.

What’s Next?

OpenAI has a few more tricks up its sleeve. One of them is GPT-4’s multimodal capabilities. Remember the demo from a thousand years ago (actually mid-March) where Greg Brockman sketched a website on a napkin, and GPT looked at the sketch and turned it into a working website?

So much has happened in the past few weeks that it is easy to forget OpenAI hasn’t yet mentioned any plans to give ChatGPT the ability to see, although this is fully within their capabilities.

I might be wrong, but I predict this next feature is not far off. I posted this speculative prediction on Reddit today:

OpenAI is developing a phone app for ChatGPT and image upload will be the killer feature.

After all, a lot of the personal value will come from snapping a picture and instantly sharing it with ChatGPT. (The other killer feature will be voice prompting with Whisper.)

Imagine taking a photo of any of these things and having GPT work on it: a paper form, your fridge, your wardrobe, a bus schedule, an error message, a book/kindle page, an instruction manual, a product's ingredients, a skin mark you're worried about, a dish, a chat screenshot, a stain you need to clean, a receipt. I could go on and on.

They have created their own version of the App Store. A phone app with image and audio inputs and killer integrations is the next logical step for entrenching their moat.

Notes

[1] A nice bonus is that, thanks to Wolfram, GPT finally knows what day it is!

[2] Alternatively, the apps can communicate through a shared protocol like REST, but this also requires work from the developers.

[3] Compare this with Bing Chat. Bing provides GPT-4 for free. It is available as a phone app. It has had browsing capabilities since the beginning. It can see the web page or PDF you’re on. It can use DALL-E to generate images for you, for free. Now they’re even adding plugins. By all accounts, Bing should be the undisputed market leader. Yet most people I know, myself included, hate using Bing for tasks that have actual utility and would much rather pay for ChatGPT Plus. The horrible interface, the constant barrage of confusing changes and features, and Sydney/Bing’s bipolar and paranoid attitude (sometimes charming, but often frustrating!) make it very hard to do real work with Bing. The lesson here is that interface matters. If your interface sucks, having the biggest firepower and the most features will not suffice.

[4] On the other hand, the essential moat element that OpenAI is missing is personal context. Once I get an assistant that can see my emails, documents, social media profiles, to-do lists, and so on, I expect it will be an order of magnitude more helpful than an assistant that knows nothing about me (assuming that privacy and security are derisked). This is where Google and Microsoft have a chance to take the upper hand. However, this is yet another argument against the thesis that big companies have no moat.