GPT Prompt Compression: A Cheap and Simple Solution

Save Tokens, Pay Less And Fit More Data to GPT

In this article, I will show you how to feed more data to GPT, whether you are using ChatGPT, Bing, or the OpenAI APIs. I explain a few simple techniques that you can use to compress your prompt without losing too much information.

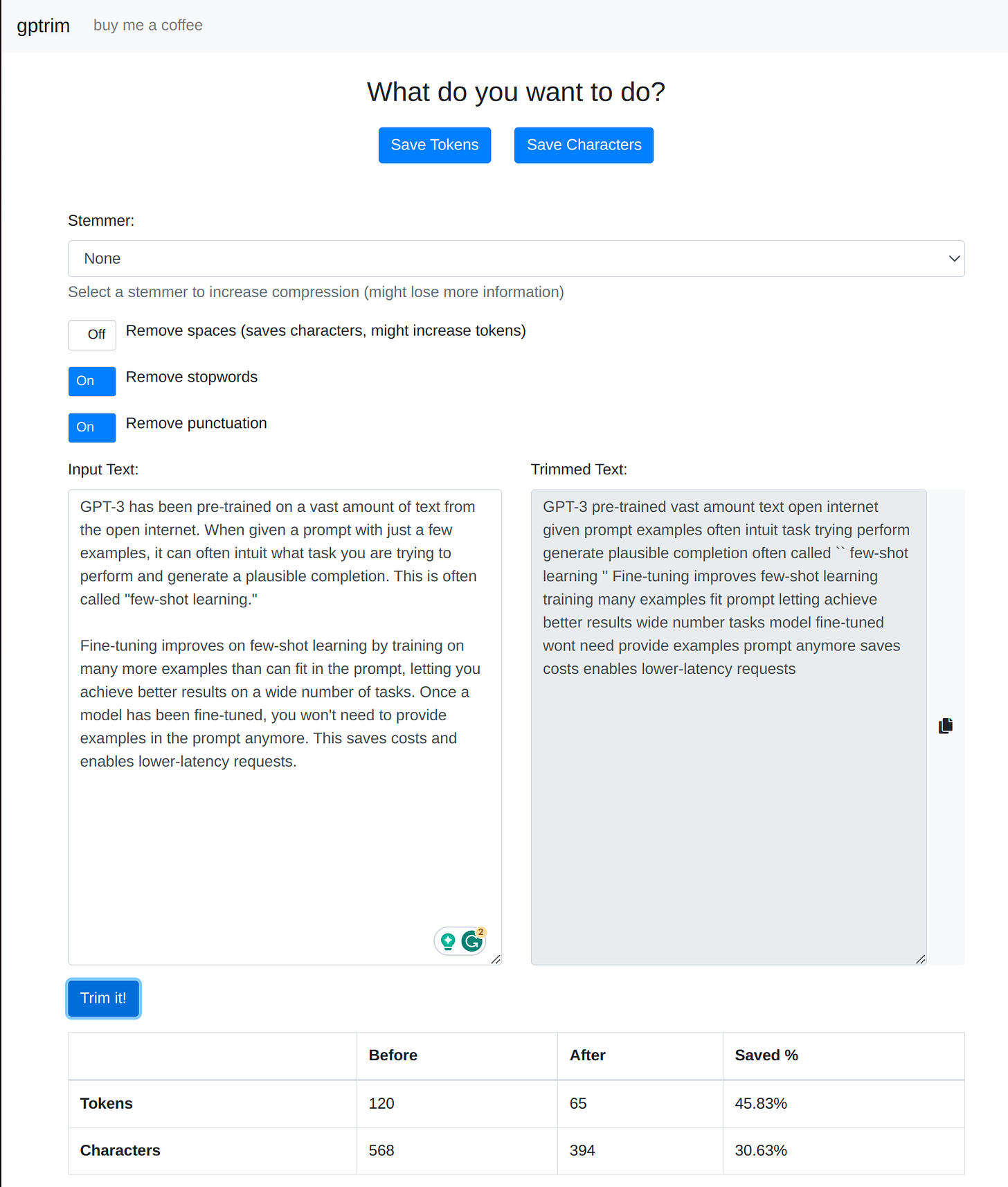

I also introduce gptrim.com, a free web tool that will help you compress your prompts.

The problem

GPT has read the entire internet, but it cannot read the novel in your drawer.

The biggest limit on using GPT is its context window, which is the maximum number of tokens (words or word pieces) it can process in a single pass. Working with a small context window can be challenging when you want to input large amounts of text.

One way to overcome this issue is to apply fine-tuning to create a custom model, but that’s an expensive process that requires some technical knowledge.

Another approach is to find ways to compress your prompt, which is the input text you provide to the AI model. By reducing the size of your prompt, you can fit more data within the context window. If you are using the API this will also save you money, since you are charged based on the number of tokens that you use.

This article will explore how you can compress your prompt without sacrificing too much information, enabling you to make better use of GPT's capabilities and feed more data into the model.

Token vs. character limits

There are two main limitations when it comes to feeding data to GPT: character size and token size. The character size limitation is more of a user interface (UI) problem, while the token size limitation is a fundamental problem related to the AI model itself.

Character size refers to the maximum number of characters that can fit in a chat message or input box in interfaces like ChatGPT or Bing. This limitation is relatively easy to get around.

Token size, on the other hand, is more complex. Tokens are the units of text that GPT works with. They can be as short as a single character or as long as a word, depending on the language and context. The token limit, or "window size," is the maximum number of tokens GPT can see at any one time.

For example, if you have a conversation with ChatGPT and the text of the conversation goes over the token limit, ChatGPT will gradually forget the start of the conversation as the context window slides forward.

How to count tokens

When working with GPT, counting tokens is important for two reasons: first, you need to ensure that your input fits within the context window; second, it allows you to predict and control costs.

Fitting within the window size: Knowing the number of tokens in your input text helps you manage the context window effectively. By staying within the token limit, you can ensure that GPT processes your entire input without truncating or losing any information.

Predicting and controlling costs: Most AI platforms, like OpenAI, charge users based on the number of tokens processed during API calls. Keeping track of token usage helps you estimate and manage the costs associated with the service. You can read OpenAI’s pricing policy here.

How do you know how many tokens your text has? One way is to paste your text into OpenAI’s web tokenizer. Token counting is also available in my free web tool, gptrim.com. Behind the scenes, I used the Python library tiktoken for this functionality.

Here are the token limits for the current models:

At the time of writing, ChatGPT has a maximum window of about 4,000 tokens, corresponding roughly to 3,000 words.

When it comes to the API, the gpt-3.5-turbo model also has a 4,000 tokens limit.

The gpt-4 model comes with a window of either 8,000 tokens or 32,000 tokens, the latter being twice as expensive. At the time of writing, most users are still on the waitlist.

How to reduce character count

When working with a UI-based application like ChatGPT or Bing, there is a limit on how many characters you can fit in a single message before you get an error.

At the time of writing, Bing has a 2,000 character limit per message, but there is an easy hack to increase it by tweaking the web code from the browser’s developer tools. You can also use Microsoft Edge’s sidebar feature to get Bing to read the page you are on, sparing you the work of pasting text in the chat.

ChatGPT also has a 2,000 character limit, but I’m not aware of any hacks to extend it.

The main insight is that you can significantly limit your character count by trimming down parts of text like spaces, stopwords and punctuation. You can also apply stemming algorithms that reduce words to common roots. The result will be almost unreadable by humans, but GPT can understand it pretty well.

Consider this text snippet (source):

“But don’t humans also have genuinely original ideas?” Come on, read a fantasy book. It’s either a Tolkien clone, or it’s A Song Of Ice And Fire. Tolkien was a professor of Anglo-Saxon language and culture; no secret where he got his inspiration. A Song Of Ice And Fire is just War Of The Roses with dragons. Lannister and Stark are just Lancaster and York, the map of Westeros is just Britain (minus Scotland) with an upside down-Ireland stuck to the bottom of it – wake up, sheeple! Dullards blend Tolkien into a slurry and shape it into another Tolkien-clone. Tolkien-level artistic geniuses blend human experience, history, and the artistic corpus into a slurry and form it into an entirely new genre. Again, the difference is how finely you blend and what spices you add to the slurry.

If you apply the above transformations, you would get this:

“donthumanalsogenuinoriginidea?”Come,readfantasibook.eitherTolkienclone,SongIceFire.TolkienprofessorAnglo-Saxonlanguagcultur;nosecretgotinspir.SongIceFireWarRosedragon.LannistStarkLancastYork,mapWesteroBritain(minusScotland)upsiddown-irelandstuckbottom–wake,sheepl!DullardblendTolkienslurrishapeanothtolkien-clon.tolkien-levelartistgeniusblendhumanexperi,histori,artistcorpusslurriformentirnewgenr.,differfineblendspiceaddslurri.

This allows you to save 46% of characters, almost doubling your capacity. It might seem incomprehensible to you but surprisingly, GPT still understands it. You can verify it with this prompt.

This is an instance of compressed text. Rewrite it so that it has perfect grammar and is understandable by a human. Try to interpret it as faithfully as possible. Do not paraphrase or add anything to the text.

Here’s what we get for our example:

Do humans also genuinely originate ideas? Come and read fantasy books, for example, either a Tolkien clone or A Song of Ice and Fire. Tolkien was a professor of Anglo-Saxon language and culture; it's no secret that he got inspiration from it. A Song of Ice and Fire was inspired by the War of the Roses and dragons. The Lannisters and Starks can be compared to the Lancasters and Yorks, and the map of Westeros is similar to Britain (minus Scotland) turned upside down, with Ireland attached to the bottom. Wake up, people! Dullards blend Tolkien's work, creating a slurry and shaping it into another Tolkien-clone. A Tolkien-level artist or genius blends human experiences, history, and artistic works to create a slurry, which then forms an entirely new genre. This difference can be compared to a fine blend of spices added to a slurry.

I have bolded the parts where I felt GPT misunderstood the original text. I think this is still a pretty good result if you are okay with losing a bit of nuance. Also, I predict that misunderstandings will be less frequent the more context GPT has around the text.

You can use gptrim.com to apply the recommended transformations for saving characters. Just select “Save characters” at the top of the page. It will also tell you how many characters you’ve saved.

How to reduce token count

If we want to limit the token count, we need a different approach. Counterintuitively, removing spaces from a text might actually increase tokens, because many tokens are padded with spaces1.

The best recipe I could find for reducing tokens is to remove stopwords and punctuation. If you apply this to our example, you get the following:

“ dont humans also genuinely original ideas ” Come read fantasy book either Tolkien clone Song Ice Fire Tolkien professor Anglo-Saxon language culture no secret got inspiration Song Ice Fire War Roses dragons Lannister Stark Lancaster York map Westeros Britain ( minus Scotland ) upside down-Ireland stuck bottom – wake sheeple Dullards blend Tolkien slurry shape another Tolkien-clone Tolkien-level artistic geniuses blend human experience history artistic corpus slurry form entirely new genre difference finely blend spices add slurry

This version saves us 48% of tokens. Remember, when using the API, you are charged for every token: this simple transformation is almost cutting your costs in half!

But is the original meaning preserved? Decide for yourself:

"Don't humans also have genuinely original ideas?" When you read a fantasy book, it is often either a Tolkien clone or a Song of Ice and Fire derivative. Tolkien, a professor of Anglo-Saxon language and culture, did not hide the fact that he was inspired by the War of the Roses for his work. Similarly, Song of Ice and Fire features dragons and Lannister and Stark houses, which resemble Lancaster and York. The map of Westeros is essentially Britain (minus Scotland) upside down, with Ireland attached to the bottom. It's time to wake up, people! Many authors simply blend Tolkien's ideas into their own works, creating yet another Tolkien-clone. However, true artistic geniuses are able to blend human experiences, history, and the artistic corpus into a unique mixture, ultimately forming an entirely new genre. The difference lies in the skillful blending of spices added to the mix.

I found three misunderstandings: two minor, and one serious (Tolkien didn’t use the War of the Roses, Song of Ice and Fire did.) But the general message is preserved.

You can apply this recipe at gptrim.com. Just select “Save tokens” at the top of the page.

gptrim.com: a free web tool to compress your prompts

Based on these insights, I made gptrim.com (with a lot of help from ChatGPT) to help you compress your prompts.

To use gptrim, paste your original prompt into input textbox. Choose whether you want to save primarily on tokens or characters. Press “Trim it!” to get the result and copy it to your clipboard.

If you use this tool, I would love to hear your feedback!

Summary

By counting tokens and applying prompt compression techniques, you can fit more data within GPT's context window and reduce your costs.

If you use ChatGPT or Bing, you can also fit more text into a single chat message, saving you from tedious copy-pasting.

Finally, note that while compression techniques can help you work with GPT's limitations, there will be some loss of information involved. I encourage you to experiment with different strategies to find the best balance between compression and comprehension.

For example, “time” and “ time” are two different tokens. This is related to how the algorithm that created the tokens works, and it’s outside the scope of this article.